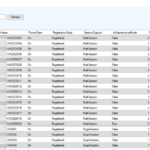

Update 10/29/19: Added search (username/userfullname) to the Sessions tab.

Studio always seems extremely sluggish to me when I navigate between different areas. Every time I go somewhere new, I wait a few seconds for the refresh circle to go away. Most of the time I just need to put a machine in/out […]