Introduction

The Citrix WAN optimization policy (or “low bandwidth” policy) reduces the bandwidth consumed by the ICA protocol by lowering the visual quality for users on slow or unreliable connections. This article benchmarks several candidate configurations to identify the most efficient low bandwidth policy in a Citrix 7.15 LTSR CU3 environment.

Testing Protocol

To determine the most efficient WAN optimization policy in the Citrix environment (7.15 LTSR CU3), a benchmark tool executes the same predefined set of actions in every test run, so that the data collected is comparable across runs. The tool used for this documentation is PCMark 10 on Windows Server 2016. The test is composed of two specific configurations:

Web (Browsing + Multimedia): automated testing of HTML5 content rendered in a web browser, including video playback and rich media web browsing.

Office (Writing + Spreadsheets): automated testing of document writing, with simulated text typing, pasting of images and text blocks, page scrolling, and generation of spreadsheets with a large number of cells and graphics.

Web Browsing and Office tests are executed separately to measure the encoder performance in each context (multimedia or text).

Default configuration baseline

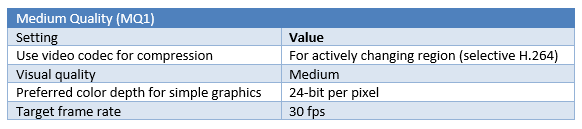

By default, the baseline ICA protocol configuration for remote access is set to medium quality.

Specific settings excluded from configuration

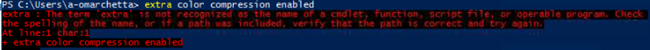

Enable Extra Color Compression:

This setting adds extra picture compression at the expense of visual quality. The benchmark measurements showed that this option brings a worthwhile gain in terms of bandwidth reduction, but the visual impact, especially on text, is not negligible. As the picture below shows, some text output becomes blurry and difficult to read.

This option is therefore excluded from the scope of the low bandwidth policy.

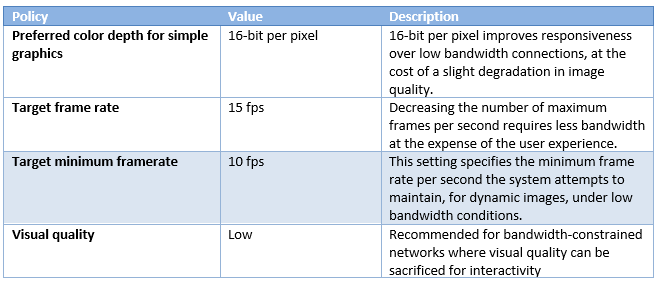

Target Minimum Frame Rate

The “Target minimum Frame Rate” setting is associated with the legacy mode (Adaptive Display or Progressive display configuration) but is still referenced in 7.15 LTSR when using the compatibility mode. It is not clear how this setting influences bandwidth compression when the frame rate drops, so it is not included in the benchmark. The default value of 10 fps is used in all configurations.

Testing parameters

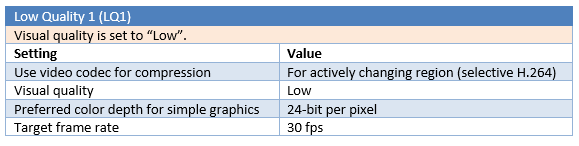

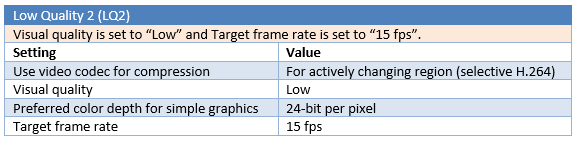

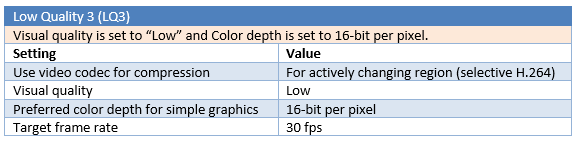

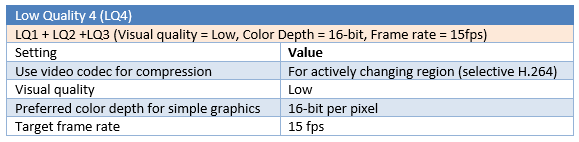

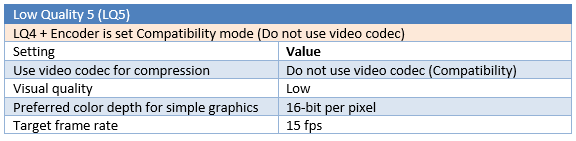

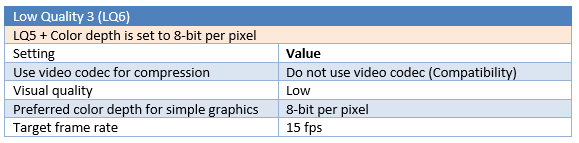

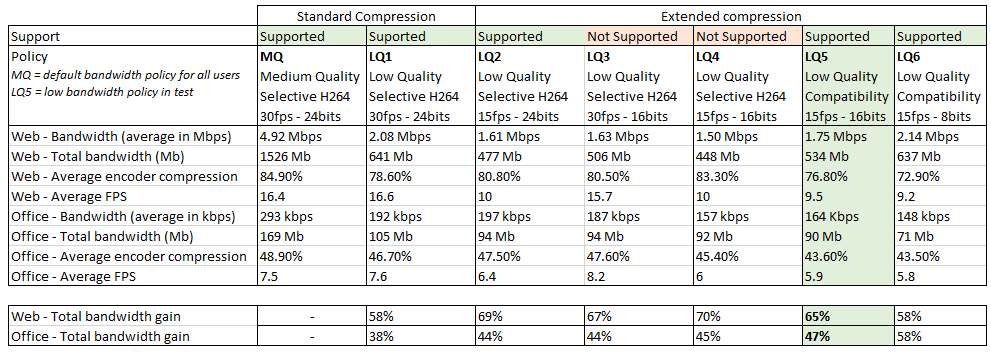

Six “Low Quality” configurations (LQ1 to LQ6), each using a different combination of parameters, are tested.

Test results

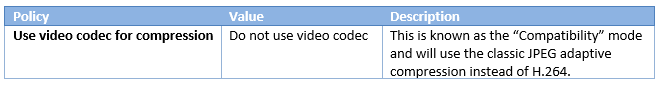

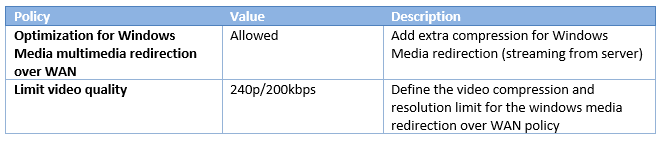

LQ5 is the most efficient configuration in this benchmark, with a 65% bandwidth gain in multimedia and web browsing testing and a 47% gain in office and text testing compared to the standard medium quality (MQ), without noticeable compression artefacts or pixelation. Selective H.264 encoding (LQ1 to LQ4) is slightly more efficient for web and multimedia activities, but gives less gain in office and text editing activities and adds noticeable compression artefacts. For office and text editing, the “Compatibility mode” in LQ5, which uses traditional JPEG compression, is more stable (fewer artefacts) and compresses more efficiently. The 8-bit mode is interesting for office and text bandwidth compression, but it performs poorly with web browsing and multimedia, where it substantially degrades the user experience.
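The gain percentages above express how much less bandwidth a test configuration uses than the MQ baseline. A minimal sketch of that calculation follows, assuming the gain is defined as the relative reduction against the baseline. The function name `bandwidth_gain` and all bandwidth figures are hypothetical placeholders, chosen only so that the output matches the 65% and 47% reported for LQ5. They are not the measured values from this benchmark.

```python
def bandwidth_gain(baseline_kbps: float, test_kbps: float) -> float:
    """Return the bandwidth reduction of a test run relative to the baseline, in percent."""
    if baseline_kbps <= 0:
        raise ValueError("baseline bandwidth must be positive")
    return 100.0 * (baseline_kbps - test_kbps) / baseline_kbps


# Placeholder figures (NOT measured data): average ICA bandwidth per test run.
mq_web_kbps, lq5_web_kbps = 1000.0, 350.0
mq_office_kbps, lq5_office_kbps = 200.0, 106.0

web_gain = bandwidth_gain(mq_web_kbps, lq5_web_kbps)
office_gain = bandwidth_gain(mq_office_kbps, lq5_office_kbps)

print(f"Web gain: {web_gain:.0f}%")        # Web gain: 65%
print(f"Office gain: {office_gain:.0f}%")  # Office gain: 47%
```

Computing the gain separately for the Web and Office runs keeps the two contexts comparable, since an encoder that performs well on multimedia may not perform well on text.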

WAN optimization user policy settings

The settings used in the policy are detailed below:

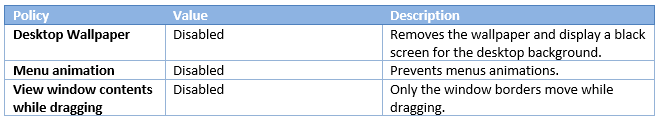

Desktop UI

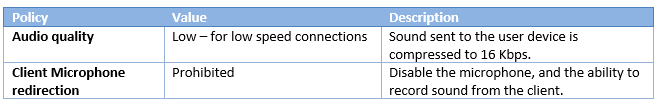

Audio

Graphics

Visual Display

Multimedia (redirection)

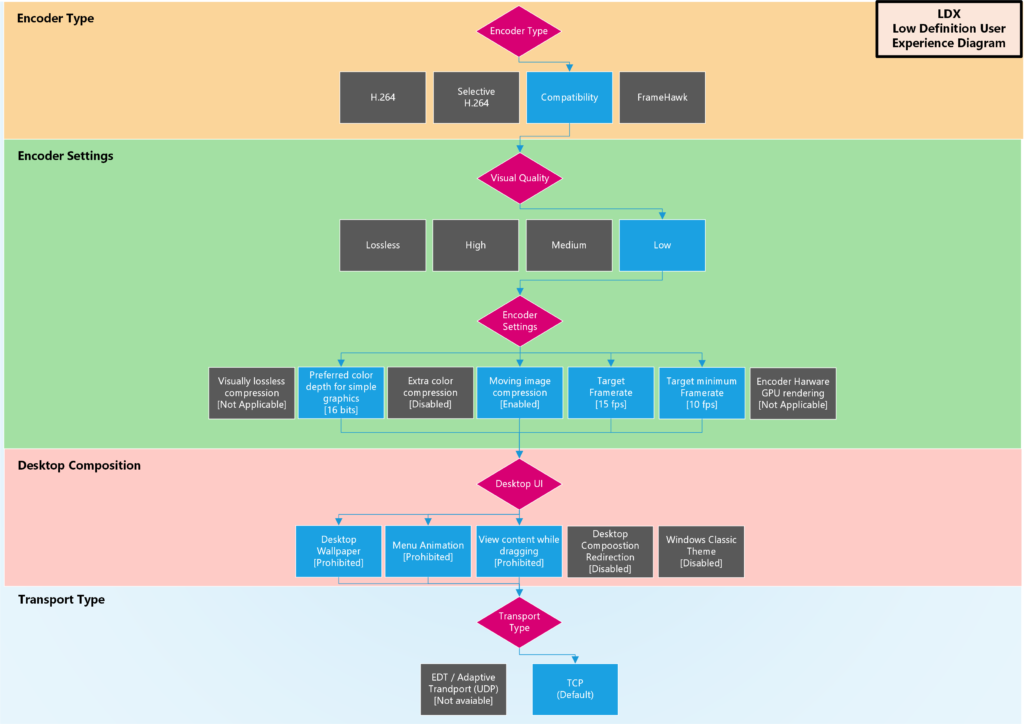

Low Bandwidth Policy Diagram