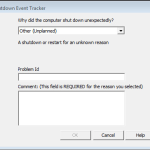

I’m sure we are all familiar with the Shutdown Event Tracker. Hypervisor crash for “no reason”? Have a bunch of servers power down hard “by accident”? It happens to all of us. What’s annoying about this, specifically in a XenApp/RDS environment, is that when a regular user logs in they will see […]